Amarnath Murugan

Graphics Programmer | Game Developer | Researcher

Hi there! I’m a Graphics Programmer at Marmoset, where I work on Toolbag, software for 3D artists with rendering, texturing, and baking features. I graduated from the University of Utah in 2024 with a Master’s in Computing, specializing in Graphics & Visualization. My research at the U focused on a novel approach to 3D modelling, with Prof. Cem Yuksel as my advisor.

Previously, I conducted research on storytelling, education, and novel interaction techniques for AR/VR at the IMXD Lab, IIT Bombay, under Prof. Jayesh Pillai. I also worked as Technical Director of Manhole Collective, the creators of the real-time animated short film Manhole, which won third place in Unreal’s Short Film Challenge in India and was screened at the prestigious Annecy film festival.

Demo Reel

Selected Publications

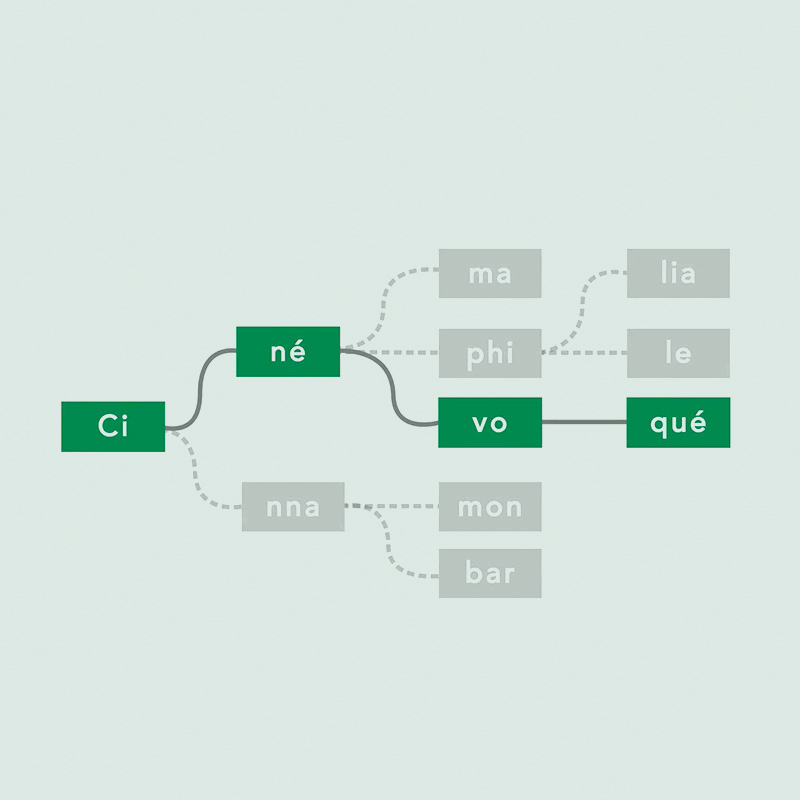

Cinévoqué: Development of a Passively Responsive Framework for Seamless Evolution of Experiences in Immersive Live-Action Movies. In 25th ACM Symposium on Virtual Reality Software and Technology, 2019.

Exploring Effect of Different External Stimuli on Body Association in VR. In 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 2020.

Towards Avatars for Remote Communication using Mobile Augmented Reality. In 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 2021.